I stumbled across this example while looking for specimen records for the genus Rafflesia, which are parasitic plants famous for the spectacular size of their flowers (up to 1m across).

The GBIF map for Rafflesia shows a few outliers. Unfortunately GBIF doesn't make it easy to drill down (why oh why can't we just click on the map and see the corresponding occurrences?) so I opened the map in iSpecies and clicked on each outlier in turn. The one in Vanuatu (438164267 from the Paris museum P00577336) is identified to genus level only and has the note:

Parasite terrestre, grande fleur orange au ras du sol. Incomplète suite à prédation. Très forte odeur désagréable. Récolté par Sylvain Hugel (photo) (alcool seul)which Google translates as:

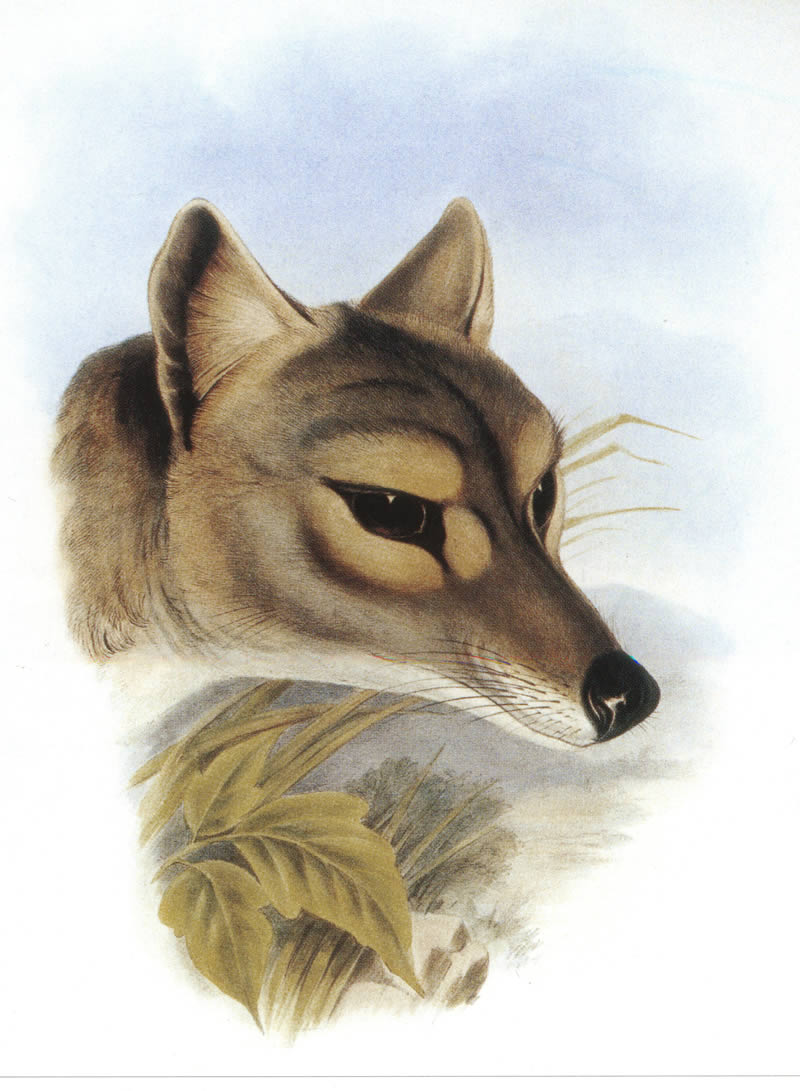

Terrestrial parasite, large orange flower at ground level. Incomplete due to predation. Very strong unpleasant odor. Collected by Sylvain Hugel (photo) (alcohol only)Sounds a bit like Rafflesia but there's no photo or other information available online. Likewise there's no additional data for the record from Brazil (1090499968). There is a record from Madagascar that is accompanied by a photo, but it that looks nothing like Rafflesia (1261055923):

That leaves two records, 2018528337 (Rafflesia cantleyi) from off the coast of Peru, and 2014813273 (Rafflesia) off the coast of Australia. Both of these records are metagenomic. For example, occurrence 2018528337 is part of a dataset Amplicon sequencing of Tara Oceans DNA samples corresponding to size fractions for protists that, on the face of it, would be an unlikely source of occurrences of forest dwelling plants.

What we get in the GBIF occurrence record is a link to the pipeline used to generate the data (Pipeline version 4.1 - 17-Jan-2018), the sample (ERS491947), and an analysis (MGYA00167469) that summarises all the taxonomic data from the ocean water sample.

What we don't get in GBIF is an obvious way to try and figure out why GBIF thinks that large flowers live in the ocean. I followed the links from MGYA00167469 and downloaded a bunch of files, some in familiar formats (FASTA), others in formats I'd not seen before (e.g., HDF5). From the mapseq file we have the following line:

ERR562574.2494029-BISMUTH-0000:2:112:3465:9749-2/89-1 GFBU01000011.4303.6106 76 0.9634146094322205 79 3 0 6 88 1722 1804 + sk__Eukaryota;k__Viridiplantae;p__Streptophyta;c__;o__Malpighiales;f__Rafflesiaceae;g__Rafflesia;s__Rafflesia_cantleyi

This tells us that sequence ERR562574.2494029-BISMUTH-0000:2:112:3465:9749-2/89-1 matches GenBank sequence GFBU01000011, which is "Rafflesia cantleyi RC_11 transcribed RNA sequence" from a paper on flower development in Rafflesia cantleyi doi:10.1371/journal.pone.0167958. So, now we see why we think we have giant flowers off the coast of Peru.

The rest of the line has information on the match: the oceanic sequence has a 0.96 identity with the plant sequence, has 79 matches, 3 mismatches, and no gaps, which suggests that this is a short sequence. Going digging in the FASTA file I found the raw sequence, and it is indeed very short:

>ERR562574.2494029-BISMUTH-0000:2:112:3465:9749-2/89-1 GTCTAAGTGTCGTGAGAAGTTCGTTGAACCTGATCATTTAGAGGAAGTAGAAGTCGTAAC AAGGTTTCCGTAGGTGAACCTGCGGAAGG

This short string is the evidence for Rafflesia in the ocean. Out of curiosity I ran this sequence through BLAST:

Query 1 GTCTAAGTGTCGTGAGAAGTTCGTTGAACCTGATCATTTAGAGGAAGTAGAAGTCGTAAC 60

|||||||||||||| |||||||||||||||| ||||||||||||||| ||||||||||||

Sbjct 1683 GTCTAAGTGTCGTGGGAAGTTCGTTGAACCTTATCATTTAGAGGAAGGAGAAGTCGTAAC 1742

Query 61 AAGGTTTCCGTAGGTGAACCTGCGGAAGG 89

|||||||||||||||||||||||||||||

Sbjct 1743 AAGGTTTCCGTAGGTGAACCTGCGGAAGG 1771

The top hit is Bathycoccus prasinos, a picoplanktonic alga with a world-wide distribution. This seems like a more plausible identification for this sequence (all the top 100 hits are very similar to each other, many are labelled as Bathycoccus).

So, there's something clearly amiss with the analysis of this dataset. Someone who knows more about metagenomics than I do will be better placed to explain why this pipeline got it so wrong, and how common this issue is.

Given the scale and automation of metagenomics, there will always be errors - that is inevitable. What we need is ways to catch those errors, especially ones that are going to "pollute" existing distribution data with spurious records (flowers in the ocean). And in a sense, GBIF excels at this in that it exposes data to a wider audience. If you work on marine microbiology you might not notice that your sequences apparently include forest plants, but if you work on forest plants you will almost certainly notice sequences occurring in the ocean.

A key feature of GBIF that makes it so useful is that, unlike many data repositories, it does not treat data as a "black box". GBIF is not like a library catalogue which merely tells you that they have books and where to find them, instead it is like Google Books, which can tell you which books contain a given phrase you are looking for. By opening up each dataset and indexing the contents, GBIF enables us to do data analysis (in much the same way that GenBank isn't just a catalogue of sequences, it enables you to search the sequences themselves).

This is a feature we risk losing if we treat metagenomics data as a black box. The Tara Oceans data that GBIF receives is simply a list of taxa at a locality, it's a checklist. We have to take it on trust that the taxonomic assignments are accurate, and it is not a trivial task to diagnose errors. Compare this to having the photo that accompanied the record from Madagascar, which helps us determine that the identification is wrong. Going forward, it would be helpful if we had metagenomic sequences available as part of the data download from GBIF. It's also worth considering whether GBIF should start doing its own analysis of sequence data, or asking its contributors to check that their taxonomic assignments are correct (e.g., running BLAST on the data). Otherwise GBIF users may end up having to filter their data for a growing number of completely spurious records.

Update

Looks like (occurrence 1261055923) is Langsdorffia:

Related to the blog post https://t.co/do5P2u5Ooj, does anyone have ideas on the identity of this species (@GBIF record https://t.co/zSr8xemkh7 from Madagascar) which is labelled "Rafflesia" pic.twitter.com/qiRtYH0tUh— Roderic Page (@rdmpage) December 20, 2019