Last week I attended the Wikipedia Science Conference (hashtag: #wikisci) at the Wellcome Trust in London. It was an interesting two days of talks and discussion. Below are a few random notes on topics that caught my eye.

What is Wikidata?

A recurring theme was the emergence of Wikidata, although it never really seemed clear what role Wikidata saw for itself. On the one hand, it seems to have a clear purpose:

Wikidata acts as central storage for the structured data of its Wikimedia sister projects including Wikipedia, Wikivoyage, Wikisource, and others.

At other times there was a sense that Wikidata wanted to take any and all data, which it doesn't really seem geared up to do. The English language Wikipedia has nearly 5 million articles, but there are lots of scientific databases that dwarf that in size (we have at least that many taxonomic names, for example). So, when Dario Taraborelli suggests building a repository of all citations with Wikidata, does he really mean ALL citations in the academic literature? CrossRef alone has 75M DOIs, whereas Wikidata currently has 14.8M pages, so adding them would expand Wikidata roughly sixfold with just one type of data.

The sense I get is that Wikidata will have an important role in (a) structuring data in Wikipedia, and (b) providing tools for people to map their data to the equivalent topics in Wikipedia. Both are very useful goals. What I find less obvious is whether (and if so, how) Wikidata aims to be a more global database of facts.

How do you Snapchat? You just Snapchat

As a relative outsider to the Wikipedia community, and having had a sometimes troubled experience with Wikipedia, it struck me how opaque things are if you are an outsider. I suspect this is true of most communities: if you are a member then things seem obvious; if you're not, it takes time to find out how things are done. Wikipedia is a community with nobody in charge, which is a strength, but can also be frustrating. The answer to pretty much any question about how to add data to Wikidata, how to add data types, etc. was "ask the community". I'm reminded of the American complaint about the European Union: "if you pick up the phone to call Europe, who do you call?" In order to engage you have to invest time in discovering the relevant part of the community, and then learn to engage with it. This can be time consuming, and is a different approach to either having to satisfy the requirements of gatekeepers, or a decentralised approach where you can simply upload whatever you want.

Streams

It seems that everything is becoming a stream. Once the volume of activity reaches a certain point, people don't talk about downloading static datasets, but instead of consuming a stream of data (very like the Twitter firehose). The volume of Wikipedia edits means social scientists studying the growth of Wikipedia are now consuming streams. Geoffrey Bilder of CrossRef showed some interesting visualisations of real-time streams of DOIs being cited as users edited Wikipedia pages (CrossRef DOI Events for Wikimedia), and Peter Murray-Rust of ContentMine seemed to imply that ContentMine is going to generate streams of facts (rather than, say, a queryable database of facts). Once we get to the stage of having large, transient volumes of data, all sorts of issues about reanalysis and reproducibility arise.
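To make the reproducibility worry concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: `doi_events` is a hypothetical stand-in for a live feed, the page titles and DOIs are placeholders, and `tally_pages` is not part of any CrossRef or Wikimedia tool. The point is simply that a stream can be read once, so any reanalysis depends on someone having archived the raw events.

```python
from collections import Counter


def doi_events():
    """Hypothetical stand-in for a live feed of (wiki page, DOI) events."""
    yield ("Charles Darwin", "10.5555/example-1")  # placeholder DOIs
    yield ("Evolution", "10.5555/example-2")
    yield ("Charles Darwin", "10.5555/example-3")


def tally_pages(events, archive=None):
    """Count events per page in a single pass.

    If an archive list is supplied, each raw event is also stored there
    so that the analysis can be repeated later.
    """
    counts = Counter()
    for page, doi in events:
        counts[page] += 1
        if archive is not None:
            archive.append((page, doi))
    return counts


stream = doi_events()
first = tally_pages(stream)
second = tally_pages(stream)  # the stream is already exhausted: nothing to count
```

Here `first` holds the per-page counts, while `second` is empty because the events have already gone by. Passing a list as `archive` on the first pass is the only way, in this toy setup, to rerun the tally afterwards.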

CrossRef and evidence

One of the other striking visualisations that CrossRef have is the DOI Chronograph, which displays the numbers of CrossRef DOI resolutions by the domain of the hosting web site. In other words, if you are on a Wikipedia page and click on a DOI for an article, that's recorded as a DOI resolution from the domain "wikipedia.org". For the period 1 October 2010 to 1 May 2015 Wikipedia was the source of 6.8 million clicks on DOIs, see http://chronograph.labs.crossref.org/domains/wikipedia.org. One way to interpret this is that it's a measure of how many people are accessing the primary literature - the underlying evidence - for assertions made on Wikipedia pages. We can compare this with results for, say, biodiversity informatics projects. For example, EOL has 585(!) DOI clicks for the period 15 October 2010 to 30 April 2015. There are all sorts of reasons for the difference between these two sites, not least that Wikipedia has vastly more traffic than EOL. But I think it also reflects the fact that many Wikipedia articles are richly referenced with citations to the primary literature, and projects like EOL are very poorly linked to that literature. Indeed, most biodiversity databases are divorced from the evidence behind the data they display.
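The idea of counting DOI resolutions by referring domain can be sketched in a few lines of Python. This is not how the DOI Chronograph is implemented (I don't know its internals); the function names and the sample referrer URLs are invented, and collapsing a host name to its last two labels is a naive assumption that would mishandle domains such as "example.co.uk".

```python
from collections import Counter
from urllib.parse import urlparse


def referring_domain(referrer_url):
    """Reduce a referrer URL to a bare domain, e.g. en.wikipedia.org -> wikipedia.org.

    Naive assumption: the domain is the last two labels of the host name.
    """
    host = urlparse(referrer_url).hostname or ""
    parts = host.split(".")
    return ".".join(parts[-2:]) if len(parts) >= 2 else host


def count_resolutions_by_domain(referrers):
    """Tally DOI resolutions by the domain of the page the click came from."""
    return Counter(referring_domain(url) for url in referrers)


# Invented sample: one referrer URL per DOI click.
sample_referrers = [
    "https://en.wikipedia.org/wiki/Charles_Darwin",
    "https://de.wikipedia.org/wiki/Evolution",
    "http://eol.org/pages/1",
]
counts = count_resolutions_by_domain(sample_referrers)
```

With this sample, the two Wikipedia language editions are pooled under "wikipedia.org", which matches the way the figures above are reported per domain.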

Diversity and a revolution led by greybeards

"Diversity" is one of those words that has become politicised, and attempts to promote "diversity" can get awkward ("let's hear from the women", that homogeneous category of non-men). But the aspect of diversity that struck me was age-related. In discussions that involved fellow academics, invariably they looked a lot like me - old(ish), relatively well-established and secure in their jobs (or post-job). This is a revolution led not by the young, but by the greybeards. That's a worry. Perhaps it's a reflection of the pressures on young or early-stage scientists to get papers into high impact factor journals, get grants, and generally play the existing game, whereas exploring new modes of publishing, output, impact, and engagement carries real risks and few tangible rewards if you haven't yet established yourself in academia.

But if we simply shuffle the order of the taxa we can’t generate all the trees. However, if we remember that we also have the internal nodes, then there is a simple way we can generate the trees.

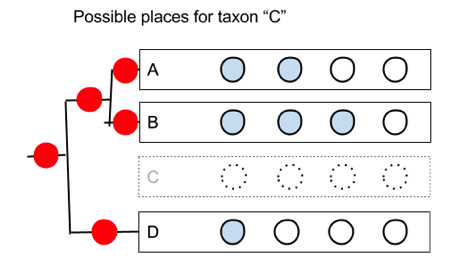


When we draw a tree each row corresponds to a node. The gap between each pair of leaves (the taxa A, B, D) corresponds to an internal node. So we could divide the drawing up into “hit zones”, so that if you drag the taxon we’re adding (“C”) onto the zone centred on a leaf, we add the taxon below that leaf; if we drag it onto a zone between two leaves, we attach it below the corresponding internal node. From the user’s point of view they are still simply sliding taxa up and down, but in doing so we can create each of the possible trees.
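A rough sketch of the hit zone idea in Python, under some assumptions of my own: rows have a fixed height, leaf rows alternate with the gaps between them, and a gap is labelled only by the two leaves on either side of it (working out which internal node that gap stands for depends on the tree, and is left out here). The names `Zone`, `build_hit_zones` and `zone_at` are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    kind: str      # "leaf" or "internal"
    label: str     # leaf name, or "X|Y" for the gap between leaves X and Y
    top: float     # y coordinate where the zone starts (inclusive)
    bottom: float  # y coordinate where the zone ends (exclusive)


def build_hit_zones(leaves, row_height=20.0):
    """Build one zone per row: leaf rows alternate with the gaps between leaves."""
    zones = []
    for i, leaf in enumerate(leaves):
        row = 2 * i
        zones.append(Zone("leaf", leaf, row * row_height, (row + 1) * row_height))
        if i + 1 < len(leaves):
            gap = f"{leaf}|{leaves[i + 1]}"
            zones.append(
                Zone("internal", gap, (row + 1) * row_height, (row + 2) * row_height)
            )
    return zones


def zone_at(zones, y):
    """Return the zone under a drop at height y, or None if y is outside the drawing."""
    for zone in zones:
        if zone.top <= y < zone.bottom:
            return zone
    return None


zones = build_hit_zones(["A", "B", "D"])
```

For the three taxa A, B and D this gives five zones, one for each node of the tree, so dropping "C" at `zone_at(zones, y)` picks out one of the five places where the new taxon can be attached.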


Google knows how many species there are. More significantly, it knows what I mean when I type in "how many species are there". Wouldn't it be nice to be able to do this with biodiversity databases? For example, how many species of insect are found in Fiji? How would you answer this question? I guess you'd Google it, looking for a paper. Or you'd look in vain on GBIF, and then end up hacking some API queries to process data and come up with an estimate. Why can't we just ask? All very sensible questions that existing biodiversity databases would struggle to answer.


Over the weekend, out of the blue, Dan Whaley commented on an earlier blog post of mine (

A little over a week ago I was at the
