One of my guilty pleasures on a Sunday morning is browsing new content on the Biodiversity Heritage Library (BHL). Indeed, so addicted am I to this that I have an IFTTT.com feed set to forward the BHL RSS feed to my iPhone (via the Pushover app). So, when I wake most Sunday mornings I have a red badge on Pushover announcing fresh BHL content for me to browse, and potentially add to BioStor.

But lately, there has been less and less content that is suitable for BioStor, and this reflects two trends that bother me. The first, which I've blogged about before, is that an increasing amount of BHL content is not hosted by BHL itself. Instead, BHL has links to external providers. For the reasons I've given earlier, I find this to be a jarring user experience, and it greatly reduces the utility of BHL (for example, this external content is not taxonomically searchable).

The other trend that worries me is that recently BHL content has been dominated by a single provider, namely the U.S. Department of Agriculture. To give you a sense of how dominant the USDA now is, below is a chart of the contribution of different sources to BHL over time.

I built this chart by querying the BHL API and extracting data on each item in BHL (source code and raw data available on GitHub). Unfortunately the API doesn't return information on when each item was scanned, but because the identifier for each item (its ItemID) is an increasing integer, if we order the items by their integer ID then we order them by the date they were added. I've binned the data into units of 1000 (in other words, every item with an ItemID < 1000 is in bin 0, ItemIDs 1000 to 1999 are in bin 1, and so on). The chart shows the top 20 contributors to BHL, with the Smithsonian as the number one contributor.
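To make the binning concrete, here is a minimal sketch in Python. It is not the code from the GitHub repository: the function names, the `(ItemID, contributor)` tuple layout, and the sample records are all made up for illustration, and it assumes the item data have already been fetched from the API.

```python
from collections import Counter, defaultdict

BIN_SIZE = 1000  # bin width described in the post


def bin_index(item_id, bin_size=BIN_SIZE):
    """ItemIDs 0-999 fall in bin 0, 1000-1999 in bin 1, and so on."""
    return item_id // bin_size


def count_by_bin(items, bin_size=BIN_SIZE):
    """Count items per contributor within each ItemID bin.

    `items` is an iterable of (item_id, contributor) pairs; this layout
    is an assumption, not the shape of the BHL API response.
    """
    counts = defaultdict(Counter)
    for item_id, contributor in items:
        counts[bin_index(item_id, bin_size)][contributor] += 1
    return counts


def top_contributors(items, n=20):
    """Return the n contributors with the most items overall."""
    totals = Counter(contributor for _, contributor in items)
    return [name for name, _ in totals.most_common(n)]


# Made-up sample records, purely illustrative (not real BHL data).
sample = [
    (12, "Smithsonian"),
    (999, "Smithsonian"),
    (1000, "Contributor A"),
    (1999, "Smithsonian"),
    (2000, "Contributor A"),
]

binned = count_by_bin(sample)
for b in sorted(binned):
    print(b, dict(binned[b]))
```

Because ItemIDs only stand in for dates, each bin represents "the next 1000 items added" rather than a fixed period of time, so the horizontal axis of a chart built this way is a sequence of additions, not a calendar.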

The chart shows a number of interesting patterns, but there are a couple I want to highlight. The first is the noticeable spikes representing the addition of externally hosted material (from the American Museum of Natural History Library and the Biblioteca Digital del Real Jardin Botanico de Madrid). The second is the recent dominance of content from the USDA.

Now, to be fair, I must acknowledge that I have my own bias as to what BHL content is most valuable. My own focus is on the taxonomic literature, especially original descriptions, but also taxonomic revisions (useful for automatically extracting synonyms). Discovering these in BHL is what motivated me to build BioStor, and then BioNames, the latter being a database that aims to link every animal taxon name to its original description. BioNames would be much poorer if it weren't for BioStor (and hence BHL).

If, however, your interest is agriculture in the United States, then the USDA content is obviously a potential goldmine of information on topics such as past crop use, pricing policies, etc. But this is a topic that is both taxonomically narrow (economically important organisms are a tiny fraction of biodiversity), and, by definition, geographically narrow.

To be clear, I don't have any problem with BHL having USDA content as such; it's a tremendous resource. But I worry that lately BHL has been pretty much all USDA content. There is still a huge amount of literature that has yet to be scanned. I'd like to see BHL actively going after major museums and libraries that have yet to contribute. I especially want to see more post-1923 content. BHL has managed to get post-1923 content from some of its contributors, but it needs a lot more. One obvious target is those institutions that signed the Bouchout Declaration. If you've signed up to providing "free and open use of digital resources about biodiversity", then let's see something tangible from that - open up your libraries and your publications, scan them, and make them part of BHL. I'm especially looking at European institutions, which (with some notable exceptions) really should be doing a lot better.

It's possible that the current dominance of USDA content is a temporary phenomenon. Looking at the chart above, BHL acquires content in a fairly episodic manner, suggesting that it is largely at the mercy of what its contributors can provide, and when they can do so. Maybe in a few months there will be a bunch of content that is taxonomically and geographically rich, and I will be spending weekends furiously harvesting that content for BioStor. But until then, my Sundays are not nearly as much fun as they used to be.

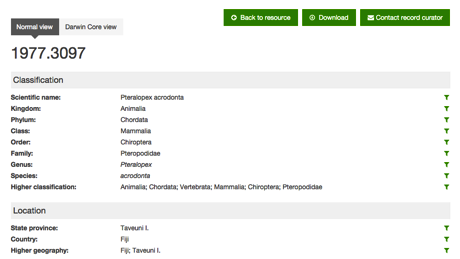
